Demystifying CNNs with Grad-CAM

Abstract

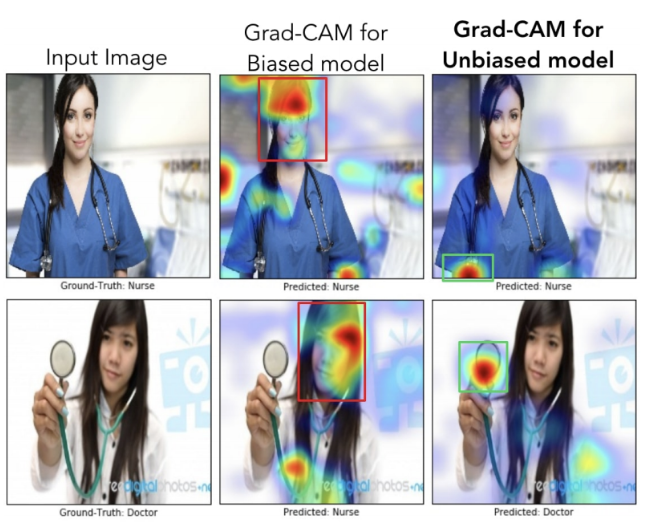

Interpretability in deep learning helps increase confidence and transparency in systems built on these techniques, especially in safety-critical systems such as autonomous vehicles and unmanned aerial vehicles. In this talk, we will see how to explain the predictions of convolutional neural networks (CNNs) using Grad-CAM (Gradient-weighted Class Activation Mapping). December 2020, The Developer’s Conference, São Paulo - SP, Brazil.

Date

Dec 3, 2020

Location

Remote